GPU edge computing is a computing approach that runs workloads locally, on or near the devices that produce the data, and uses graphics processing units (GPUs) to balance performance against power and energy consumption. GPUs were originally designed for rendering graphics. Because they have many simple cores, they are well suited to the highly parallel computations found in many data-processing workloads, and on those workloads they can outperform general-purpose central processing units (CPUs).

In a world filled with data, graphics cards make it possible to process large quantities of data at high speed, using fast on-board memory to hold the data being worked on. You can also visit Ai-Blox for more detailed information about GPU edge computing.

Image Source: Google

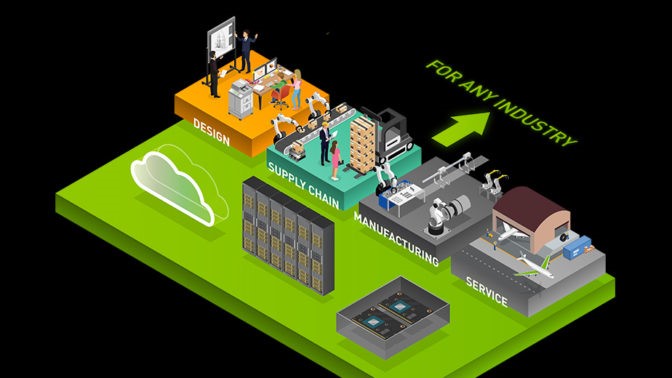

GPU edge computing is a type of computing that uses a graphics processing unit (GPU) to process data at the edge of a network, close to where the data is generated, instead of sending it to a central data center. Because the data does not have to travel to a remote server and back, this can reduce latency and bandwidth use. That benefits organizations that need to process large amounts of data quickly or in real time.
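To make the latency argument concrete, here is a back-of-the-envelope sketch. It assumes a simplified model in which cloud latency is upload time plus network round trip plus remote compute time, while edge latency is only local compute time. It ignores queuing, returning results, and other overheads. The function names and all input numbers are hypothetical placeholders, not measurements.

```python
# Back-of-the-envelope latency model (simplified; all numbers hypothetical).

def cloud_latency_ms(payload_mb: float, uplink_mbps: float,
                     round_trip_ms: float, compute_ms: float) -> float:
    """Upload time + network round trip + compute time on a remote server."""
    # megabytes -> megabits, divided by link speed -> seconds, then -> ms
    upload_ms = payload_mb * 8 / uplink_mbps * 1000
    return upload_ms + round_trip_ms + compute_ms


def edge_latency_ms(compute_ms: float) -> float:
    """Data stays on the device, so only local compute time is counted."""
    return compute_ms


if __name__ == "__main__":
    # Hypothetical inputs: 0.5 MB camera frame, 20 Mbps uplink, 60 ms round
    # trip, 10 ms on a data-center GPU versus 25 ms on a smaller edge GPU.
    print(f"cloud: {cloud_latency_ms(0.5, 20, 60, 10):.0f} ms")  # cloud: 270 ms
    print(f"edge:  {edge_latency_ms(25):.0f} ms")                # edge:  25 ms
```

With these placeholder inputs, uploading the frame alone takes 200 ms, so even a slower local GPU responds much sooner. With a faster link or smaller payloads, the gap narrows.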

GPU edge computing can be used for a variety of tasks, including image and video processing, machine learning, and data analytics. It can also be used to power Augmented Reality (AR) and Virtual Reality (VR) applications.
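Many of these tasks are data-parallel: the same small operation is applied independently to every pixel, sample, or matrix element. The sketch below illustrates that structure with a grayscale conversion using the ITU-R BT.601 luma weights. `grayscale_kernel` plays the role of the work one GPU thread would do, and `launch` stands in for a kernel launch. Both names are hypothetical, and the loop here runs sequentially on the CPU. On a real GPU, programmed through a framework such as CUDA, each index would typically be handled by its own thread.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel 0-255


def grayscale_kernel(rgb: List[Pixel], out: List[int], i: int) -> None:
    """Work done by one (hypothetical) GPU thread: convert pixel i only."""
    r, g, b = rgb[i]
    # ITU-R BT.601 luma weights
    out[i] = round(0.299 * r + 0.587 * g + 0.114 * b)


def launch(rgb: List[Pixel]) -> List[int]:
    """Stand-in for a kernel launch. On a GPU each index would get its own
    thread running in parallel; here the indices are processed one by one."""
    out = [0] * len(rgb)
    for i in range(len(rgb)):
        grayscale_kernel(rgb, out, i)  # reads rgb[i], writes only out[i]
    return out


if __name__ == "__main__":
    print(launch([(255, 0, 0), (0, 255, 0), (255, 255, 255)]))  # [76, 150, 255]
```

Because no iteration reads another iteration's output, the work can be split across as many execution units as are available. This is the property GPUs exploit.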

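GPU edge computing has several advantages over traditional CPU-based edge computing. For workloads that can be split into many independent operations, such as pixel processing or the matrix arithmetic behind neural networks, a GPU can run many of those operations in parallel and finish far sooner than a CPU. CPUs remain better suited to serial tasks with complex branching logic, so the speedup depends on how much of the workload is parallel. For the same reason, GPUs can deliver more computation per watt than CPUs on parallel workloads, which can help reduce energy costs.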
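How much faster a GPU is depends on how much of the workload can run in parallel. One standard way to reason about this, not taken from the original article, is Amdahl's law, S = 1 / ((1 - p) + p / N), where p is the fraction of runtime that can be parallelized and N is the number of parallel execution units. The sketch below treats N as an idealized count and ignores memory transfers and other overheads.

```python
def amdahl_speedup(parallel_fraction: float, units: int) -> float:
    """Amdahl's law: S = 1 / ((1 - p) + p / N).

    parallel_fraction (p): share of the runtime that can be parallelized, 0..1.
    units (N): idealized number of parallel execution units.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if units < 1:
        raise ValueError("units must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / units)


if __name__ == "__main__":
    for p in (0.50, 0.90, 0.95, 0.99):
        print(f"p={p:.2f}: {amdahl_speedup(p, 1000):6.1f}x with 1000 units")
```

With 1,000 units, a workload that is 95% parallel speeds up by less than 20x, and one that is only 50% parallel by less than 2x, because the serial part still runs at its original speed.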

There are some challenges associated with GPU edge computing, however. For example, GPUs draw considerable power and can generate a lot of heat, which must be managed carefully in compact or enclosed edge devices. Additionally, setting up and maintaining a GPU-based system, including its drivers and software toolkits, can be more complex than for a CPU-based system.

Despite these challenges, GPU edge computing is becoming increasingly popular, because for many workloads its benefits outweigh the drawbacks.